Why Deep Research Breaks Traditional RAG Systems

Today’s AI agents issue hundreds of retrievals per session to investigate complex topics, ground insights in evidence, and generate reliable, contextual answers. That workload exceeds what conventional retrieval stacks can handle. In production, these stacks:

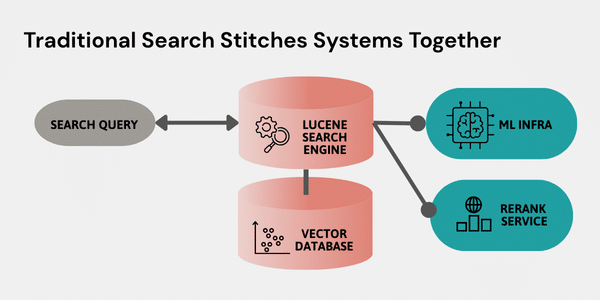

- Rely on external services for ML reranking, which slows responses and increases cost

- Break under real-time update requirements because they lack live indexing and ingestion

- Can’t join structured and unstructured data on the fly

- Suffer latency spikes and throughput drops under multi-hop retrieval