Perplexity: Where Knowledge Begins

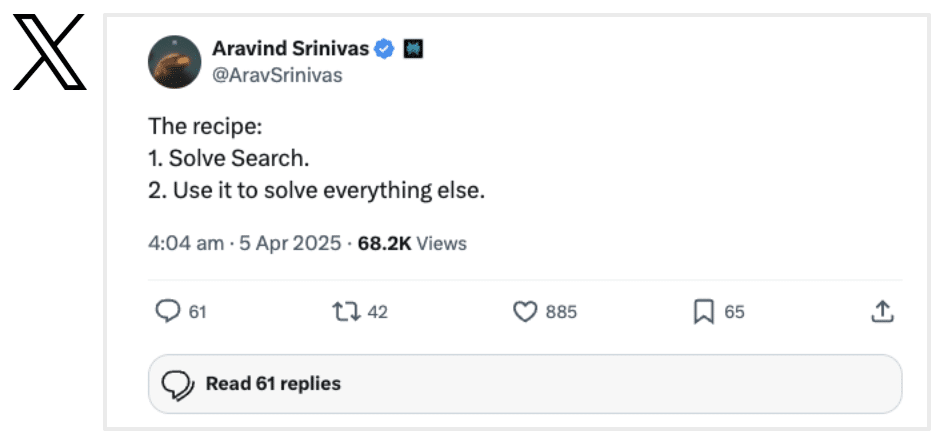

Perplexity has quickly become one of the most innovative players in generative AI. By combining large language models (LLMs) with real-time retrieval, it delivers accurate, conversational answers with cited sources at web scale. This experience stands apart from traditional search engines by giving users a transparent way to find trusted information.

By May 2025, Perplexity reported 22 million active users and 780 million monthly queries, driven by demand for fast and reliable answers across public and private data sources.