Vespa.ai enables business leaders to scale AI-powered experiences across the enterprise. By unlocking insights from internal data—including millions of documents—and powering real-time, relevant interactions, Vespa helps drive growth, reduce operational costs and risk, and accelerate the return on AI investments.

VP of Growth – Drive Engagement with Real-Time Personalization

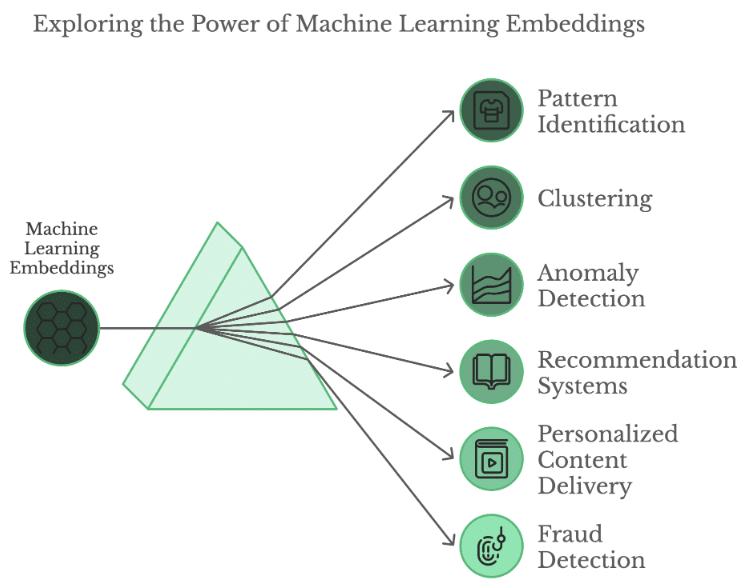

Use Vespa to deploy real-time search, recommendation, and personalization that adapts to every customer interaction. Drive higher engagement and conversion rates while creating more effective, data-driven campaigns. With Vespa’s efficient infrastructure, your team can move faster, optimize budget, and continuously refine customer journeys.

Head of Customer Support – Boost Satisfaction While Reducing Cost-to-Serve

Use Vespa’s Retrieval-Augmented Generation (RAG) to scale GenAI-powered support across your organization’s data and documents—without increasing headcount. Reduce support costs through intelligent automation, enable faster issue resolution, and empower agents with real-time access to relevant information.

VP of Product – Design Better Products

Use Vespa’s Retrieval-Augmented Generation (RAG) to accelerate innovation and deliver smarter, compliant financial products. Unlock insights from your internal data and documents, streamline product development, and personalize user experiences without compromising control or accuracy.

Chief Risk Officer (CRO) – Maintain a Proactive, Well-Informed Risk Posture

Use Vespa’s Retrieval-Augmented Generation (RAG) to apply GenAI securely across your internal data and documents. Identify emerging risks faster, streamline compliance workflows, and support confident decision-making with real-time access to policies, reports, and regulatory updates.

Chief Information Security Officer (CISO) – Strengthen Security Awareness and Response

Apply Vespa’s Retrieval-Augmented Generation (RAG) to deploy GenAI securely across your organization’s threat intelligence, incident logs, and policy documents. Surface relevant insights in real time, accelerate threat detection and response, and improve access to security protocols—without compromising control or data privacy.

Head of Wealth Advisory – Deliver More Personalized, Scalable Client Experiences

Use Vespa’s Retrieval-Augmented Generation (RAG) and real-time personalization to apply GenAI across client portfolios, market research, and financial product documentation. Equip advisors with intelligent recommendations, automate tailored content delivery, and scale one-to-one experiences—while maintaining accuracy, compliance, and a high-touch advisory model.

VP of People Operations – Improve Access to HR Knowledge and Insights

Use Vespa to power tightly controlled enterprise search across policies, benefits, onboarding materials, and employee feedback. Teams can instantly find accurate, up-to-date information, which reduces repetitive HR inquiries and supports more informed decision-making across the organization.