Elasticsearch vs. Vespa Resource Page

The Elasticsearch Alternative: A Performance Benchmark

Discover how an Elasticsearch alternative like Vespa.ai outperforms Elasticsearch in speed, scalability, and cost-efficiency—with up to 12.9x faster vector search and 5x lower infrastructure costs. Access other resources below.

Welcome to the Elasticsearch vs. Vespa Resource Page

Here, you’ll find key resources to help you modernize enterprise search and move beyond Elasticsearch’s cost and complexity with a more efficient Vespa alternative.

What’s Included:

- Benchmark Report – A reproducible performance comparison between Vespa and Elasticsearch for an e-commerce search application with 1 million products.

- Benchmark Executive Summary – An ungated summary of the full Benchmark Report.

- Customer Story: Vinted – Learn how Vinted replaced Elasticsearch with Vespa.

- Vinted Presentation – A recording from the eCommerce webinar.

- GigaOm CxO Brief: Migrating to AI-Native Search and Data Serving Platforms

- Modernizing Enterprise Search – A manager’s guide to the key considerations for organizations looking to modernize enterprise search by migrating from Elasticsearch to Vespa.ai.

- Six Benefits of Switching From Elasticsearch to Vespa – A one-page summary.

- Vespa Data Management – A detailed guide on high-performance data management.

- Migration Brief – A roadmap to migrating from Elasticsearch to Vespa.

The Limits of Elasticsearch

- Performance bottlenecks – Slower query speeds as datasets grow

- Scalability issues – Complex shard configurations, costly infrastructure

- High operational costs – Resource-heavy indexing & maintenance

Vespa eliminates these challenges through a dynamic, automated, and scalable architecture. Its dynamic data distribution removes the need for static shard allocation, while built-in automatic data rebalancing ensures smooth scaling with minimal downtime. This approach reduces operational complexity, enabling businesses to scale effortlessly while maintaining high availability and performance—even as data and workloads grow.

- 12.9x higher throughput for vector search

- 8.5x faster hybrid queries

- 6.5x better performance for lexical searches

- 4x more efficient for real-time updates

- Handles 1 billion+ searchable items

- 2.5x lower query latency than Elasticsearch

- Up to 5x reduction in infrastructure costs

- Simplified architecture = fewer servers, less complexity

When Europe’s largest second-hand marketplace transformed search with Vespa.ai

50% reduction in servers. 2.5x lower search latency. 3x lower indexing latency. Delays brought down from 300 seconds to just 5 seconds.

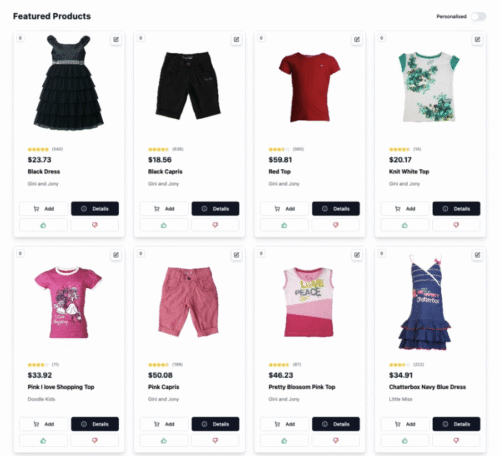

Smarter Product Discovery with Real-Time AI

See how Vespa uses tensors, semantic search, and real-time personalization to power intelligent, business-aware eCommerce experiences.

Why Vespa.ai?

AI-Native Search Engine: Built for vector search, lexical search & hybrid search

Real-Time Scalability: No complex shard rebalancing, seamless scaling

Lower Costs: Fewer nodes, better efficiency, and reduced maintenance overhead

Proven Success: Vinted reduced infrastructure from 6 Elasticsearch clusters to 1 Vespa cluster

See the data for yourself. Get the full benchmark report below ↓

Download Benchmark Full Report: Elasticsearch vs Vespa Performance Comparison.

Vespa is designed to handle the demands of enterprise AI applications.

Seamless handling of structured and unstructured data for advanced queries. Real-time performance across massive datasets. Cutting-edge vector and hybrid search technology for modern AI applications. A great Elasticsearch alternative.

What performance gains can I expect when switching from Elasticsearch to Vespa.ai?

Vespa.ai offers significant performance improvements over Elasticsearch, providing up to 9x higher query throughput. This includes 5x faster performance for hybrid searches, 9x faster for vector searches, and 3x faster for lexical searches, all while maintaining 2x–6x lower latency. This can result in major cost savings, with some use cases showing up to 5x infrastructure cost reductions.

How does Vespa.ai handle real-time data updates compared to Elasticsearch?

Unlike Elasticsearch, which makes new and updated data searchable only after the next refresh cycle, Vespa.ai makes data searchable as soon as it is ingested. This is essential for use cases that require instant updates, such as real-time e-commerce pricing, financial data changes, or live content publishing.
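
The difference can be pictured with a toy model. The sketch below is plain Python, not Elasticsearch or Vespa code. The function names and the 1-second refresh interval are assumptions chosen for illustration, and real systems involve more moving parts than this.

```python
import math


def visible_after_refresh(write_time, query_time, refresh_interval=1.0):
    """Toy model of refresh-based visibility.

    Refresh ticks happen at multiples of refresh_interval (an assumed value).
    A write becomes searchable at the first tick strictly after it arrives.
    """
    next_refresh = (math.floor(write_time / refresh_interval) + 1) * refresh_interval
    return query_time >= next_refresh


def visible_immediately(write_time, query_time):
    """Toy model of immediate visibility: searchable once the write is done."""
    return query_time >= write_time


# A price update written at t=0.2s and queried at t=0.5s:
print(visible_after_refresh(0.2, 0.5))  # not yet searchable in the refresh model
print(visible_immediately(0.2, 0.5))    # already searchable in the immediate model
```

In this model the same query sees the update only once the next refresh tick has passed, which is the gap that matters for pricing or inventory changes.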

Is Vespa.ai more scalable than Elasticsearch?

Yes, Vespa.ai offers superior scalability without the bottlenecks that can affect Elasticsearch. It distributes data into fine-grained buckets for better load balancing, which prevents the uneven shard distribution seen in Elasticsearch. This allows Vespa.ai to scale dynamically, ensuring high availability and predictable performance even at large scales.
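
To see why fine-grained buckets help when a cluster grows, consider the following minimal sketch. It is not Vespa's actual distribution algorithm: it uses rendezvous hashing as a stand-in, and the bucket count, node names, and function names are all hypothetical.

```python
import hashlib

NUM_BUCKETS = 1024  # hypothetical bucket count, chosen only for this sketch


def _hash(value):
    """Stable 64-bit hash of a string."""
    digest = hashlib.sha256(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def bucket_of(doc_id):
    """Map a document ID to one of many small buckets."""
    return _hash(doc_id) % NUM_BUCKETS


def node_for_bucket(bucket, nodes):
    """Rendezvous hashing: the node with the highest hash for this bucket wins."""
    return max(nodes, key=lambda node: _hash(f"{bucket}:{node}"))


def assign(nodes):
    """Compute the bucket-to-node assignment for a given set of nodes."""
    return {bucket: node_for_bucket(bucket, nodes) for bucket in range(NUM_BUCKETS)}


before = assign(["node-0", "node-1", "node-2"])
after = assign(["node-0", "node-1", "node-2", "node-3"])

# Buckets whose owner changed when node-3 joined the cluster.
moved = [b for b in before if before[b] != after[b]]
print(f"{len(moved)} of {NUM_BUCKETS} buckets moved")
print("all moved buckets went to the new node:",
      all(after[b] == "node-3" for b in moved))
```

With this scheme, adding a node moves only the buckets that the new node takes over, and the remaining buckets stay where they were. That is the general idea behind rebalancing without redefining shards.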

How does Vespa.ai support AI and machine learning applications?

Vespa.ai is optimized for AI and machine learning, with built-in tensor support that enables real-time personalization, recommendations, and vector-based search. It supports complex ranking and multi-phase retrieval, making it an ideal choice for large-scale AI applications, such as visual search and ColBERT-style retrieval, all without the need for external tools.
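
Multi-phase retrieval can be sketched in a few lines. The example below is plain Python rather than Vespa's rank-profile configuration. The scoring functions, the `alpha` weight, the `rerank_count` parameter, and the sample documents are assumptions made for illustration.

```python
import math


def lexical_score(query, text):
    """Cheap first-phase score: fraction of query terms found in the text."""
    terms = query.lower().split()
    words = set(text.lower().split())
    return sum(1 for t in terms if t in words) / len(terms) if terms else 0.0


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def two_phase_rank(query, query_vec, docs, rerank_count=2, alpha=0.5):
    """Rank all docs cheaply, then rerank only the top candidates."""
    # Phase 1: lexical score over every document.
    first = sorted(docs, key=lambda d: lexical_score(query, d["text"]), reverse=True)
    top, rest = first[:rerank_count], first[rerank_count:]

    # Phase 2: costlier hybrid score (lexical + vector) on the top candidates only.
    def hybrid(d):
        return (alpha * lexical_score(query, d["text"])
                + (1 - alpha) * cosine(query_vec, d["vec"]))

    return sorted(top, key=hybrid, reverse=True) + rest


docs = [
    {"id": "a", "text": "red running shoes", "vec": [0.2, 0.8]},
    {"id": "b", "text": "running shoes for trail", "vec": [1.0, 0.0]},
    {"id": "c", "text": "garden hose", "vec": [1.0, 0.0]},
]
ranked = two_phase_rank("running shoes", [1.0, 0.0], docs)
print([d["id"] for d in ranked])
```

The point of the second phase is cost control: the more expensive scoring runs only on the few candidates that survive the first phase.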

What makes Vespa.ai easier to manage than Elasticsearch?

Vespa.ai simplifies operations by reducing management overhead. For instance, after migrating to Vespa, Vinted was able to reduce its server count by half, replacing six Elasticsearch clusters with a single Vespa deployment. This not only reduced the infrastructure needed but also improved search consistency and performance, resulting in more relevant search results.

Vespa Platform Key Capabilities

- Vespa provides all the building blocks of an AI application, including vector database, hybrid search, retrieval-augmented generation (RAG), natural language processing (NLP), machine learning, and support for large language models (LLMs).

- Vespa unifies search, recommendation, and personalization in one platform. This streamlined approach reduces complexity, accelerates development, and enables more cohesive, effective solutions, with no more siloed thinking.

- Build AI applications that meet your requirements precisely. Integrate your operational systems and databases using Vespa’s APIs and SDKs, without duplicating data.

- Achieve precise, relevant results using Vespa’s hybrid search capabilities, which combine multiple data types: vectors, text, structured, and unstructured data. Machine learning algorithms rank and score results to ensure they meet user intent and maximize relevance.

- Enhance relevance and personalization by leveraging Vespa’s real-time tensor operations. Go beyond keyword matching with support for vectors from text, images, location, and other complex data sources.

- Enhance content analysis with NLP through advanced text retrieval, vector search with embeddings, and integration with custom or pre-trained machine learning models. Vespa enables efficient semantic search, allowing users to match queries to documents based on meaning rather than just keywords.

- Search and retrieve data using detailed contextual clues that combine images and text. By enhancing the cross-referencing of posts, images, and descriptions, Vespa makes retrieval more intelligent and visually intuitive, transforming search into a seamless, human-like experience.

- Ensure seamless user experience and reduce management costs with Vespa Cloud. Applications dynamically adjust to fluctuating loads, optimizing performance and cost to eliminate the need for over-provisioning.

- Deliver instant results through Vespa’s distributed architecture, efficient query processing, and advanced data management. With optimized low-latency query execution, real-time data updates, and sophisticated ranking algorithms, Vespa actions data with AI across the enterprise.

- Deliver services without interruption with Vespa’s high availability and fault-tolerant architecture, which distributes data, queries, and machine learning models across multiple nodes.

- Seamlessly handle increased demand with Vespa’s horizontal and vertical scaling capabilities, adding capacity on demand to maintain peak performance during high-traffic periods.

- Bring computation to the data distributed across multiple nodes. Vespa reduces network bandwidth costs, minimizes latency from data transfers, and ensures your AI applications comply with existing data residency and security policies. All internal communications between nodes are secured with mutual authentication and encryption, and data is further protected through encryption at rest.

- Avoid catastrophic run-time costs with Vespa’s highly efficient and controlled resource consumption architecture. Pricing is transparent and usage-based.