What is RAG?

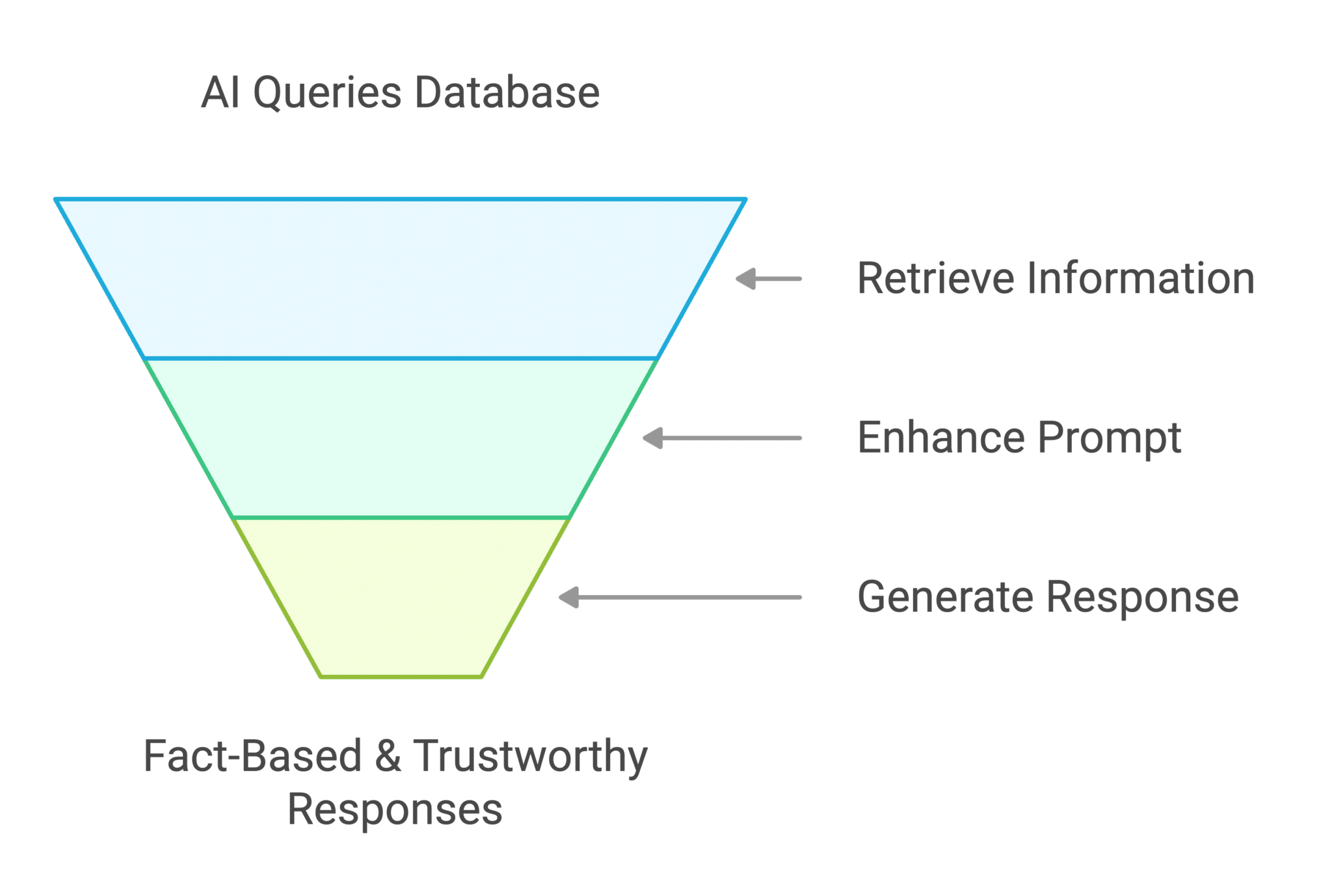

RAG, or Retrieval-Augmented Generation, is like an “open-book test” for AI.

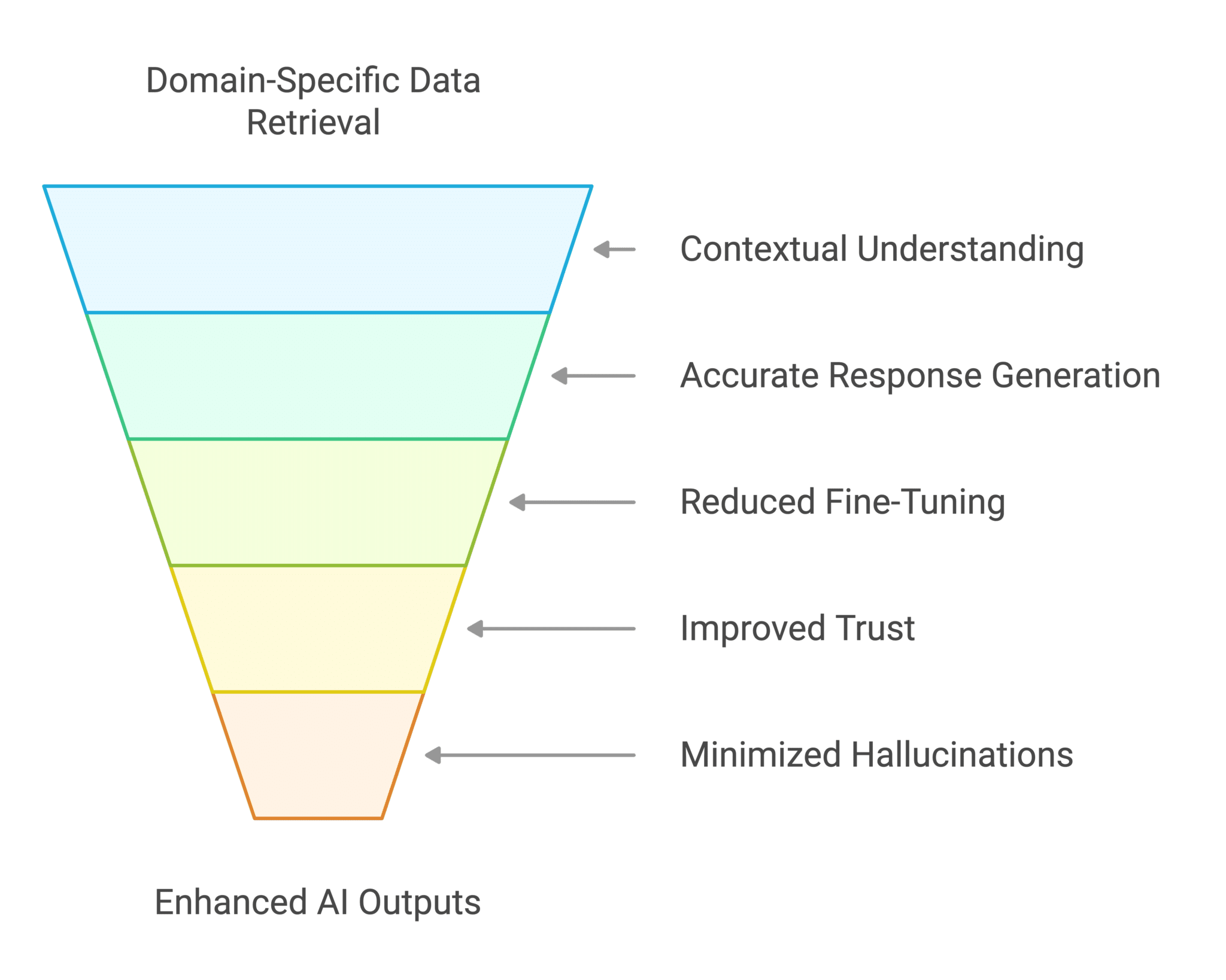

- Instead of guessing from its training data alone, a GenAI model first retrieves relevant, domain-specific data and then generates its response from it.

- The result? More accurate, governed, and context-aware AI outputs.

- RAG lessens the need for fine-tuning, improves trust, and reduces hallucinations.
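
The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a production design: the `DOCUMENTS` list, the word-overlap `score` function, and the prompt wording are all hypothetical stand-ins. A real system would more likely use a vector store with embeddings for retrieval and would send the final prompt to an actual language model.

```python
import re
from collections import Counter

# Hypothetical in-memory knowledge base (assumption for illustration only).
DOCUMENTS = [
    "Refunds are issued within 14 days of purchase.",
    "Support is available Monday to Friday, 9am to 5pm.",
    "Premium plans include priority support and audit logs.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, doc):
    """Count the tokens shared by the query and the document."""
    query_counts = Counter(tokenize(query))
    doc_counts = Counter(tokenize(doc))
    return sum((query_counts & doc_counts).values())


def retrieve(query, documents, k=1):
    """Return the k documents with the highest overlap score."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Assemble a prompt that grounds the question in retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    # The resulting prompt is what would be passed to a language model.
    print(build_prompt("How long do refunds take?", DOCUMENTS))
```

The "open book" here is the retrieved context: the model is asked to answer from the refund policy sentence placed in the prompt rather than from whatever it happens to remember.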